Google will now require verified election advertisers to prominently disclose any use of artificial intelligence or digitally altered content in their ads.

The rule will also apply to YouTube video ads.

The new policy comes after Florida Governor Ron DeSantis' campaign faced severe backlash for using fake images generated by artificial intelligence to attack former President Donald Trump.

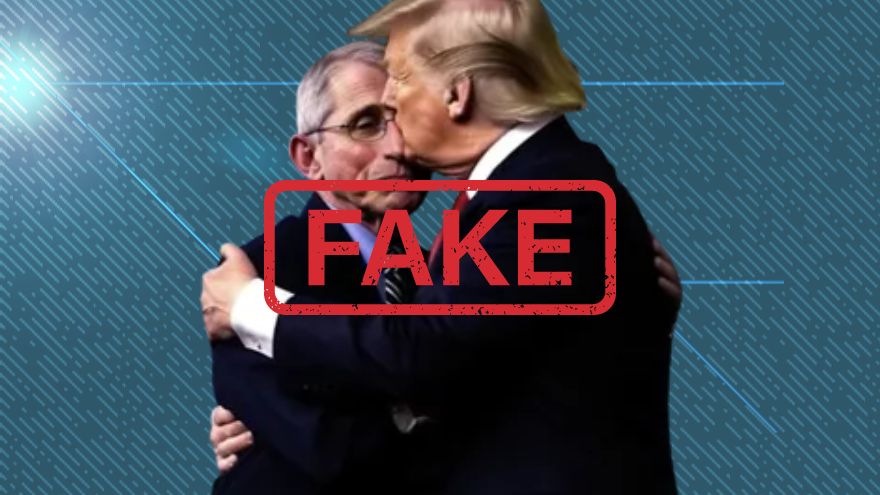

The fake images were part of an ad shared by "DeSantis War Room" on Twitter on June 5, attacking the former president for not firing Dr. Anthony Fauci while he was in office.

> Donald Trump became a household name by FIRING countless people *on television*
>
> But when it came to Fauci... pic.twitter.com/7Lxwf75NQm
>
> — DeSantis War Room 🐊 (@DeSantisWarRoom) June 5, 2023

The deepfake images appear in a collage of six photos of Trump and Fauci together: three are real and three are fake.

"It was sneaky to intermix what appears to be authentic photos with fake photos, but these three images are almost certainly AI generated," Hany Farid, a professor at the University of California, Berkeley and an expert in digital forensics, misinformation and image analysis, told AFP, which fact-checked the ad.

On Wednesday, Google announced that beginning in November, campaigns must "prominently disclose when their ads contain synthetic content that's been digitally altered or generated and depicts real or realistic-looking people or events... inclusive of AI tools."

"This update builds on our existing transparency efforts — it'll help further support responsible political advertising and provide voters with the information they need to make informed decisions," Michael Aciman, a Google spokesperson, said in a statement, according to a report from Axios.

Google specified that the disclosure must be "clear and conspicuous" and placed where viewers are likely to notice it. Advertisers must also explain which parts are artificial, for example "this audio was computer generated" or "this video content was synthetically generated."

The new policy contains an exemption for content that is "inconsequential to the claims made in the ad." This includes editing techniques like cropping or background edits that do not create realistic misrepresentations.

"Ads that depict someone saying or doing something they never said or did, or that alter footage of a real event, will fall under the new policy," Axios reported.

Campaigns or advertisers who repeatedly violate the rules will be suspended from using Google Ads.

Politico reports that the Federal Election Commission hasn’t set rules on using AI in political campaign ads, but "in August it voted to seek public comments on whether to update its misinformation policy to include deceptive AI ads."

"Facebook currently doesn’t require the disclosure of synthetic or AI-generated content in its ads policies. It does have a policy banning manipulated media in videos that are not in advertisements, and bans the use of deepfakes," the report noted.